Society public company members across sizes and industries who responded to the most recent Society/Deloitte survey, “Board Practices Quarterly: Artificial intelligence (AI) revisited,” provided insights on various aspects of their companies’ AI practices. These included where AI resides in the organization, use policies and frameworks, risk mitigation measures, education and training, and board oversight. Among the objectives was to understand how the use and governance of AI had evolved since our 2023 report.

Among the takeaways:

Use of AI (n=61) — Companies are most commonly using AI in relation to delivery of services, sales/marketing, and product development, with all areas showing an increase in use compared to our last member survey conducted in May 2023.

Management responsibility (n=61) — A majority of companies assign primary (or shared primary) responsibility for AI matters to the IT/technology department or function, or to a cross-functional working group.

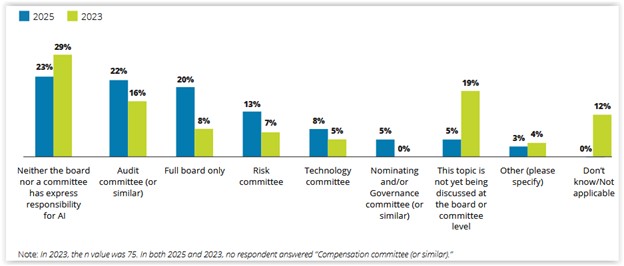

Board oversight structure (n=60) — A slight plurality of respondents (23%) said that neither the board nor a board committee has express responsibility for AI, whereas 22% allocate primary oversight to the audit committee and 20% retain responsibility at the full board level.

AI on the agenda (n=56) — Nearly 60% of respondents reported that AI-related topics are on the board or board committee meeting agenda on an ad hoc or as-needed basis. Notably, just 9% this year vs. 44% in 2023 said that these topics have not appeared on a full board or committee meeting agenda.

Types of AI information provided to the board/committees (n=50) — The vast majority (88%) of boards/board committees regularly receive AI strategy updates (e.g., development and execution of plans to use AI in strategic objectives and functional areas), while more than half receive information on AI risks and on the strategic risks associated with the company’s use of AI.

Workplace use & policies — Two-thirds of respondents (n=59), compared to just 25% in 2023, said that their company allows AI tools for specific uses. Notably, 70% reported having an AI policy or policies in the current survey (n=54) vs. just 13% of companies reporting an AI use framework, AI policy(ies), or AI code of conduct in 2023.

More than one-third (37%) of companies have revised corporate policies (e.g., privacy, cyber, risk management, records retention) to address the use of AI, while another 31% are currently considering doing so (n=59).

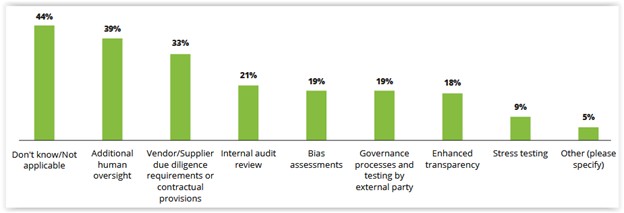

Risk mitigation (n=57) — Companies that have adopted or implemented AI risk mitigation measures most commonly cited additional human oversight and vendor/supplier due diligence requirements or contractual provisions.

Education/Training (n=59) — A plurality (42%) of companies provide education/training on AI for employees, compared to just 11% of companies that did so in 2023. Board education/training on AI is delivered in-house by 17%, by an external advisor/provider by 14%, and via encouraged participation in external AI-focused education programs by another 15%.

Responses tended to vary by company size. Small-cap and private company findings were omitted from the report and the accompanying demographics reports due to limited respondent populations; however, members may access those results by emailing Randi Val Morrison.